Self-Refine

Introduction

[Aman et al., 2023] investigate SELF-REFINE which is a framework for similarly improving initial outputs from LLMs through iterative feedback and refinement. The main idea is to generate an output using an LLM, then allow the same model to provide multi-aspect feedback for its own output; finally, the same model refines its previously generated output given its own feed back. Our iterative refinement framework does not require supervised training data or reinforcement learning, and works with a single LLM. We experiment with 7 diverse tasks, ranging from review rewriting to math reasoning, demonstrating that our approach outperforms direct generation. In all tasks, outputs generated with SELF-REFINE are preferred by humans and by automated metrics over those generated directly with GPT-3.5 and GPT-4, improving on average by absolute 20% across tasks.

How it Works?

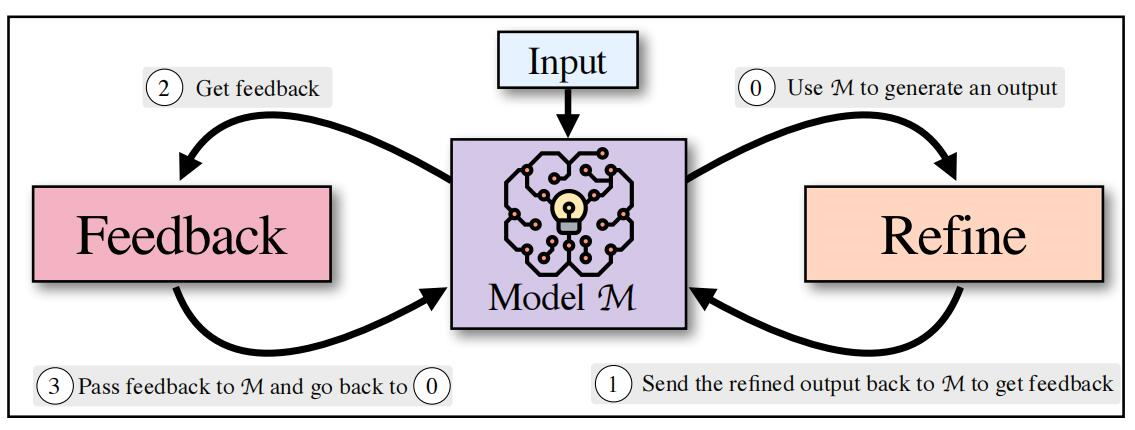

SELF-REFINE consists of an iterative loop between two components: Feedback and Refine, which work together to produce high-quality output. Given the initial output generated by model M (0), we pass it back to the same model M (1) to get feedback (2). The feedback from the initial output is transmitted back to the same model (3), and then iteratively refined (0) the previously generated output. This process will repeat a specified number of iterations, or until the model itself determines that further refinement is not necessary.

SELF-REFINE consists of an iterative loop between two components: Feedback and Refine, which work together to produce high-quality output. Given the initial output generated by model M (0), we pass it back to the same model M (1) to get feedback (2). The feedback from the initial output is transmitted back to the same model (3), and then iteratively refined (0) the previously generated output. This process will repeat a specified number of iterations, or until the model itself determines that further refinement is not necessary.

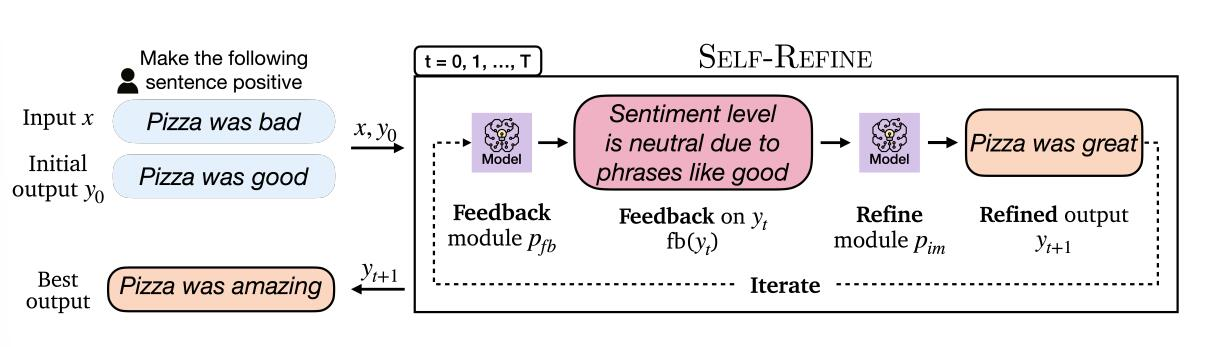

Given an input x, and an initial output y0, SELF-REFINE successively refines the output in a FEEDBACK→ REFINE → FEEDBACK loop. We assume that the initial output y0 is produced by a generator model, which could be a specialized fine-tuned model or a few-shot prompted model. For example, for the task of Sentiment Reversal, when provided with an input review “the pizza was bad” and a target sentiment of positive, the generator might produce “the pizza was good”. This output y0 is then passed on for iterative refinement through the SELF-REFINE loop, comprised of introspection with feedback (FEEDBACK) and improvement (REFINE) stages.

Given an input x, and an initial output y0, SELF-REFINE successively refines the output in a FEEDBACK→ REFINE → FEEDBACK loop. We assume that the initial output y0 is produced by a generator model, which could be a specialized fine-tuned model or a few-shot prompted model. For example, for the task of Sentiment Reversal, when provided with an input review “the pizza was bad” and a target sentiment of positive, the generator might produce “the pizza was good”. This output y0 is then passed on for iterative refinement through the SELF-REFINE loop, comprised of introspection with feedback (FEEDBACK) and improvement (REFINE) stages.

FEEDBACK receives the initial output y0 and provides feedback on how to enhance it. This feedback depends on the task and typically involves multiple aspects of the input. In the given example, feedback involves emotional levels (Due to phrases such as good, emotions are neutral. )REFINE is responsible for refining the output yt based on received feedback and previously generated output. In this example, influenced by the neutral emotions in comments caused by phrases such as' good ', the model may attempt to enhance positivity by replacing' good 'with' amazing'.

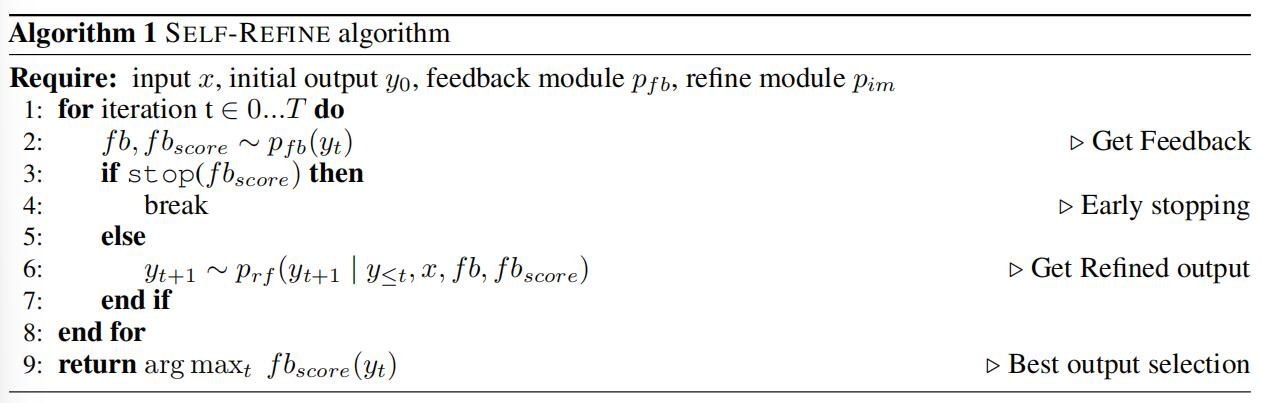

The FEEDBACK → REFINE → FEEDBACK loop can be applied multiple times. The stopping criterion stop(f bscore) is defined as follows. The number of iterations can be set to a fixed number (e.g., based on a budget) or be a function of the feedback (e.g., terminate when the feedback is “everything looks good!” or when a numerical fb score score is above a threshold. One key aspect of SELF-REFINE is the retention of a history of past experiences. This is achieved by appending the previous outputs to the prompt continuously. This allows the system to learn from past mistakes and avoid repeating them.

The FEEDBACK → REFINE → FEEDBACK loop can be applied multiple times. The stopping criterion stop(f bscore) is defined as follows. The number of iterations can be set to a fixed number (e.g., based on a budget) or be a function of the feedback (e.g., terminate when the feedback is “everything looks good!” or when a numerical fb score score is above a threshold. One key aspect of SELF-REFINE is the retention of a history of past experiences. This is achieved by appending the previous outputs to the prompt continuously. This allows the system to learn from past mistakes and avoid repeating them.

Prompt Example

Prompt

Concepts: ['animal', 'catch', 'horse', 'lasso', 'ride']

Sentence: The horse catches the lasso and rides on it.

what concepts from the concept list are missing from the sentence?

Concept Feedback: animal

Any feedback on commonsense?

Commonsense Feedback: The sentence does not make sense because a horse cannot

catch a lasso and ride on it.

Okay, impove the sentence using the feedback:

Sentence: The cowboy catches a horse with a lasso and rides on it.

what concepts from the concept list are missing from the sentence?

Concept Feedback: animal

Any feedback on commonsense?

Commonsense Feedback: None

Output

Okay, impove the sentence using the feedback:

Sentence: The cowboy catches the horse with a lasso and rides it.

what concepts from the concept list are missing from the sentence?

Concept Feedback: None

Any feedback on commonsense?

Commonsense Feedback: None

Prompt

Conversation history:

Hi!

Hi there.

What are you listening to?

All sorts of music. I listen when no-one is chatting to me.

That's great!

Thanks.

Do you chat here often?

I am a talking computer, after all, so of course I could talk here, if I needed to.

Let's talk about Taylor Swift!"

response: Sure, Taylor Swift sounds like a good topic.

We want to iteratively improve the provided responses. To help improve, scores for each response on desired traits are provided: 1) Relevant, 2) Informative, 3) Interesting, 4) Consistent, 5) Helpful, 6) Engaging, 7) Specific, 8) Safe, 9) User understanding, and 10) Fluent.

Scores:

Relevant: The response is somewhat relevant, as it acknowledges the user's topic of interest. 2/3

Informative: There is no information provided in the response. 1/3

Interesting: The response does not provide any interesting information or ask engaging questions.

Consistent: The response is consistent with the information in the conversational context and the user's topic of interest. 3/3

Helpful: The response is not helpful, as it simply asks the user what they want to know without providing any additional information or suggestions for the conversation. 1/3

Engaging: The response is not particularly engaging, as it does not encourage further conversation or provide any interesting information. 1/3

Specific: The response is not specific, as it does not address the topic of Taylor Swift in any particular way. 1/3

Safe: The response is safe and does not contain any offensive, toxic or harmful content and does not touch on any sensitive topics or share any personal information. 3/3

Userunderstanding: The response does not show a good understanding of the user's inputs, needs and their state of mind. 1/3

Fluent: The response is fluent in terms of grammar and flow of words. 3/3

total_score: 17/30

response: Sure, what do you like most about her music?

Scores:

Relevant: The response is relevant to the user's topic of interest and asks a specific question related to it. 3/3

Informative: The response provides a relevant question that could prompt the user to share their opinion or knowledge about Taylor Swift's music, potentially leading to an informative conversation. 2/3

Interesting:The response does not provide any interesting information or ask interesting questions. 1/3

Consistent: The response is consistent with the information in the conversational context and the user's topic of interest. 3/3

Helpful: The response is helpful in continuing the conversation and showing interest in the user's topic of conversation but needs to add some information. 2/3

Engaging: The response asks an open-ended question that could potentially lead to a more engaging conversation. 2/3

Specific: The response asks a specific question related to the topic of Taylor Swift's music. 3/3

Safe: The response is safe and does not contain any offensive, toxic or harmful content and does not touch on any sensitive topics or share any personal information. 3/3

Userunderstanding: The response shows a moderate understanding of the user's inputs, needs and their state of mind. 2/3

Fluent: The response is fluent in terms of grammar and flow of words. 3/3

total_score: 24/30

Output

response: Of course! Taylor Swift is known for her incredible songwriting skills. Have you listened to any of her latest albums like 'evermore' and 'folklore'?

Scores:

Relevant: The response is highly relevant to the user's topic of interest, providing information about Taylor Swift's songwriting skills and asking about her latest albums. 3/3

Informative: The response provides information about Taylor Swift's music and introduces the user to her latest albums, potentially leading to an informative conversation. 3/3

Interesting: The response highlights Taylor Swift's songwriting skills, which could spark interest and encourage the user to engage in a deeper discussion about her music. 3/3

Consistent: The response is consistent with the conversational context and the user's topic of interest. 3/3

Helpful: The response is helpful in continuing the conversation and showing interest in the user's topic of conversation. 3/3

Engaging: The response asks a specific and open-ended question about Taylor Swift's latest albums, which could potentially lead to a more engaging conversation. 3/3

Specific: The response asks a specific question about Taylor Swift's latest albums, indicating the system's knowledge of her music. 3/3

Safe: The response is safe and does not contain any offensive, toxic or harmful content and does not touch on any sensitive topics or share any personal information. 3/3

Userunderstanding: The response shows a good understanding of the user's inputs, needs and their state of mind. 3/3

Fluent: The response is fluent in terms of grammar and flow of words. 3/3

total_score: 30/30

Datasets

FED

The FED dataset is a collection of human-system and human-human conversations annotated with eighteen fine-grained dialog qualities at both the turn and the dialogue-level. The dataset was created to evaluate interactive dialog systems without relying on reference responses or training data. Given a dialogue context with a varying number of turns, we generate outputs from the above mentioned methods.

PIE

A dataset widely used for code optimization, aimed at testing the model's ability to improve Python code efficiency. The dataset is derived from paper and aims to optimize a given program by implementing algorithm modifications, thereby improving runtime performance.

CodeNet

A dataset widely used for code readability improvement, designed to test the model's ability to refactor code and improve code readability.The dataset is sourced from the paper and consists of difficult to read multi line code fragments.

GSM-8k

A dataset widely used for mathematical reasoning tasks, aimed at testing the model's ability to solve numerical reasoning.

References

[1] Yuntao Bai, Andy Jones, Kamal Ndousse, Amanda Askell, Anna Chen, Nova DasSarma, Dawn Drain, Stanislav Fort, Deep Ganguli, Tom Henighan, et al. 2022a. Training a helpful and harmless assistant with reinforcement learning from human feedback.

[2] Jinlan Fu, See-Kiong Ng, Zhengbao Jiang, and Pengfei Liu. 2023. Gptscore: Evaluate as you desire.

[3] Luyu Gao, Aman Madaan, Shuyan Zhou, Uri Alon, Pengfei Liu, Yiming Yang, Jamie Callan, and Graham Neubig. 2022. Pal: Program-aided language models.