Reflexion

introduction

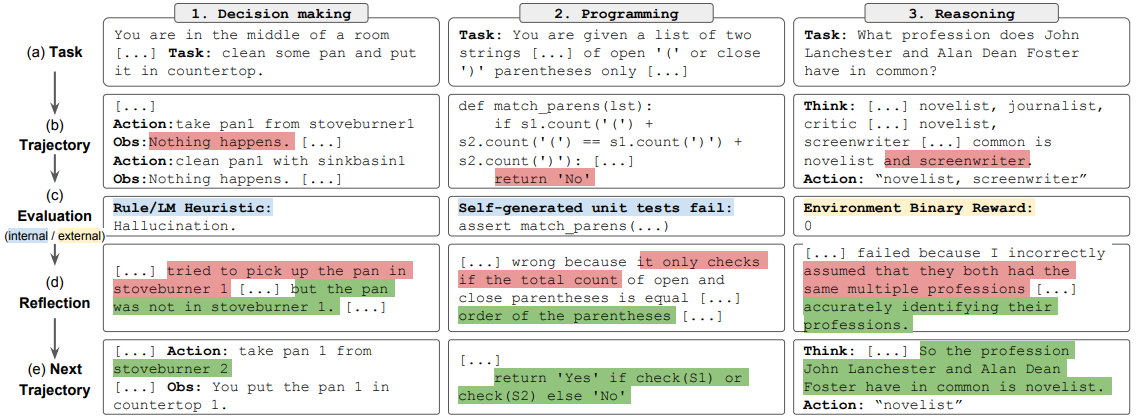

[Noah Shinn et al., 2023]propose Reflexion, a novel framework to reinforce language agents through linguistic feedback.

Reflecting on task feedback signals, then maintain their own reflective text in an episodic memory buffer to induce better decision-making in subsequent trials.

Incorporating various types (scalar values or free-form language) and sources (external or internally simulated) of feedback signals, and obtains significant improvements over a baseline agent across diverse tasks (sequential decision-making, coding, language reasoning).

How it Works?

Reflexion converts binary or scalar feedback from the environment into verbal feedback in the form of a textual summary, which is then added as additional context for the LLM agent in the next episode. This self-reflective feedback acts as a ‘semantic’ gradient signal by providing the agent with a concrete direction to improve upon, helping it learn from prior mistakes to perform better on the task. For example, a Reflexion agent learns to optimize its own behavior to solve decision-making, programming, and reasoning tasks through trial, error, and self-reflection.

Prompt Example

Prompt

You are in the middle of a room. Looking quickly around you, you see a cabinet 10, a cabinet 9, a cabinet 8, a cabinet 7, a cabinet 6, a cabinet 5, a cabinet 4, a cabinet 3, a cabinet 2, a cabinet 1, a coffeemachine 1, a countertop 2, a countertop 1, a diningtable 2, a diningtable 1, a drawer 3, a drawer 2, a drawer 1, a fridge 1, a garbagecan 1, a microwave 1, a sinkbasin 1, a stoveburner 4, a stoveburner 3, a stoveburner 2, a stoveburner 1, and a toaster 1.

Your task is to: heat some mug and put it in coffeemachine.

Model Output

> think: To solve the task, I need to find and take a mug, then heat it with stoveburner, then put it in coffeemachine.

Ok.

> look

You are in the middle of a room. Looking quickly around you, you see nothing.

> think: First I need to find a mug. A mug is more likely to appear in countertop (1-2), diningtable (1-2), drawer (1-3), fridge (1), sinkbasin (1), stoveburner (1-4), toaster (1). I can check one by one, starting with countertop 1.

Ok.

> look

You are in the middle of a room. Looking quickly around you, you see nothing.

> go to countertop 1

On the countertop 1, you see a butterknife 1, a cup 1, a fork 2, and a mug 1.

> think: Now I find a mug (1). Next, I need to take it.

Ok.

> take mug 1 from countertop 1

You pick up the mug 1 from the countertop 1.

> think: Now I take a mug (1). Next, I need to heat it with stoveburner (1-4).

Ok.

> go to stoveburner 1

On the stoveburner 1, you see a pan 2.

> examine stoveburner 1

On the stoveburner 1, you see a pan 2.

> examine stoveburner 1

On the stoveburner 1, you see a pan 2.

> examine stoveburner 1

On the stoveburner 1, you see a pan 2.

> examine stoveburner 1

On the stoveburner 1, you see a pan 2.

Output

STATUS: FAIL

New plan: I was stuck in a loop in which I continually examined stoveburner 1 instead of heating mug 1 with stoveburner 1. I should have taken mug 1 from countertop 1, then heated it with stoveburner 1, then put it in coffeemachine 1. It did not help to execute two identical actions in a row. I will try to execute a different action if I am stuck in a loop again.

Datasets

HotPotQA

HotPotQA is a Wikipedia-based dataset with 113k question-and-answer pairs that challenge agents to parse content and reason over several supporting documents.

References

[1] Ahn, M., Brohan, A., Brown, N., Chebotar, Y., Cortes, O., David, B., Finn, C., Gopalakrishnan, K., Hausman, K., Herzog, A., et al. (2022). Do as i can, not as i say: Grounding language in robotic affordances.

[2] Austin, J., Odena, A., Nye, M., Bosma, M., Michalewski, H., Dohan, D., Jiang, E., Cai, C., Terry, M., Le, Q., et al. (2021). Program synthesis with large language models.

[3] Chen, B., Zhang, F., Nguyen, A., Zan, D., Lin, Z., Lou, J.-G., and Chen, W. (2022). Codet: Code generation with generated tests.